Introduction, statistics review

Class overview

Machine learning & data-driven modeling in bioengineering

Lecture:

- Mondays/Wednesdays, 10–11:50 am

- Mong Learning Center

Lab

- Varies by section—check the course schedule.

Lecture Slides

- Lecture slides will be posted on the course website.

- Finalized by the night before so you can print them out if you want.

- The slides do not contain everything; they include space to fill in missing elements during class.

Textbook / Other Course Materials

- There is no textbook for this course.

- I will post related readings prior to each lecture.

- These will either broaden the scope of material covered in class, or provide critical background.

- I will make it clear if the material is more than optional.

Support / Office Hours

Prof. Meyer

- Thursday, 9–9:50 am on Zoom

- I will usually stick around after class and am happy to answer questions

TAs

- By appointment

- Will have regular times set up soon

Campuswire

- Allows for quick help from me & the TAs

Learning Goals:

By the end of the course you will have an increased understanding of:

- Critical Thinking and Analysis: Understand the process of identifying critical problems, analyzing current solutions, and determining alternative successful solutions.

- Engineering Design: Apply mathematical and scientific knowledge to identify, formulate, and solve problems in the chosen design area.

- Computational Modeling: Apply computational tools to solve and optimize engineering problems.

- Communicate Effectively: Learn how to give an effective presentation. Understand how to communicate progress orally and in written reports.

- Manage and Work in Teams: Learn to work and communicate effectively with peers to attain a common goal.

Practical Learning Objectives

By the end of the course you will learn how to:

- Identify whether and how a problem can be solved by machine learning.

- Determine the prerequisites to applying the method.

- Implement different techniques to solve the scientific/engineering task.

- Critically assess machine learning results.

Grade Breakdown

- Final Project: 25%

- Lab Assignments: 25%

- Midterms 1 & 2: 40%

- Class Participation: 10%

Labs

Sessions

- These are mandatory sessions.

- You will have an opportunity to get started on each week’s implementation and/or work on your project.

Computational Labs

- You will implement what we have learned using real data from studies.

- Reinforces the material with hands-on experience by implementing what we learn in class.

- These are meant to challenge you to become comfortable applying the material.

- Document your effort. Get started early. Seek answers to your questions in office hours, in lab, or on Campuswire!

- Your lowest grade will be automatically dropped.

Project

- You will take data from a scientific paper, and implement a machine learning method using best practices.

- A list of papers and data repositories is provided on the website as suggestions.

- Absolutely go find ideas that interest you!

- More details to come.

- First deadline will be in week 6 to pick a project topic.

Exams

- We will have midterm exams in weeks 5 and 10.

- You will have a final project in lieu of a final exam.

Keys to Success

- Participate in an engaged manner with in-class and take-home activities.

- Turn assignments in on time.

- Work through activities, reading, and problems to ensure your understanding of the material.

If you do these three things, you will do well.

Introduction

What do we need to learn about the world?

- What is a measurement?

- What is a model?

Three things we need to learn about the world

- Measurements (data)

- Models (inference)

- Algorithms

Area of Focus

What we will cover spans a range of fields:

- Engineering (the data)

- Computational techniques (the algorithms)

- Statistics (the model)

Why do we need models to learn about the world?

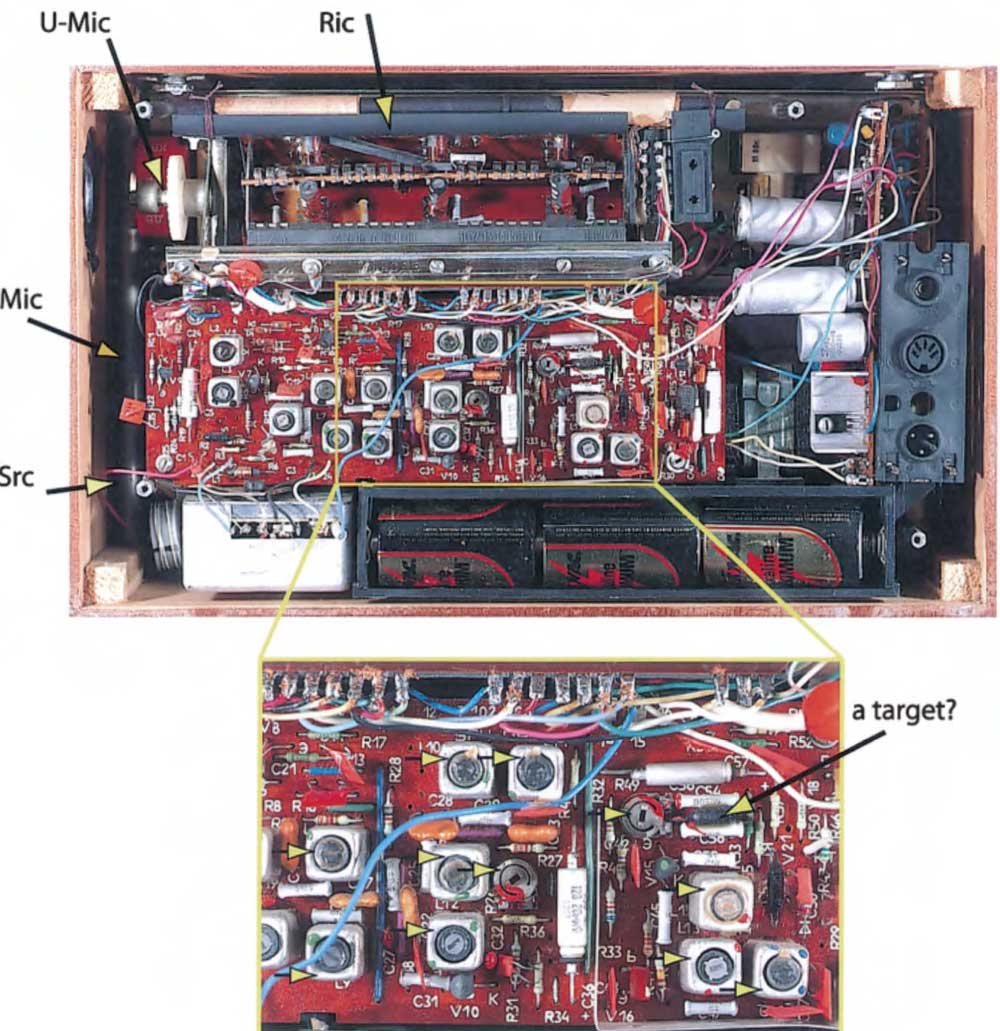

Why we need models - Can a biologist fix a radio?

Lazebnik, Cancer Cell, 2002

Comparisons

- Multiscale nature

- Biology operates on many scales

- Same is true for electronics

- But electronics employ compartmentalization/abstraction to make systems understandable

- Component-wise understanding

- Only provides basic characterization

- Leads to “context-dependent” function

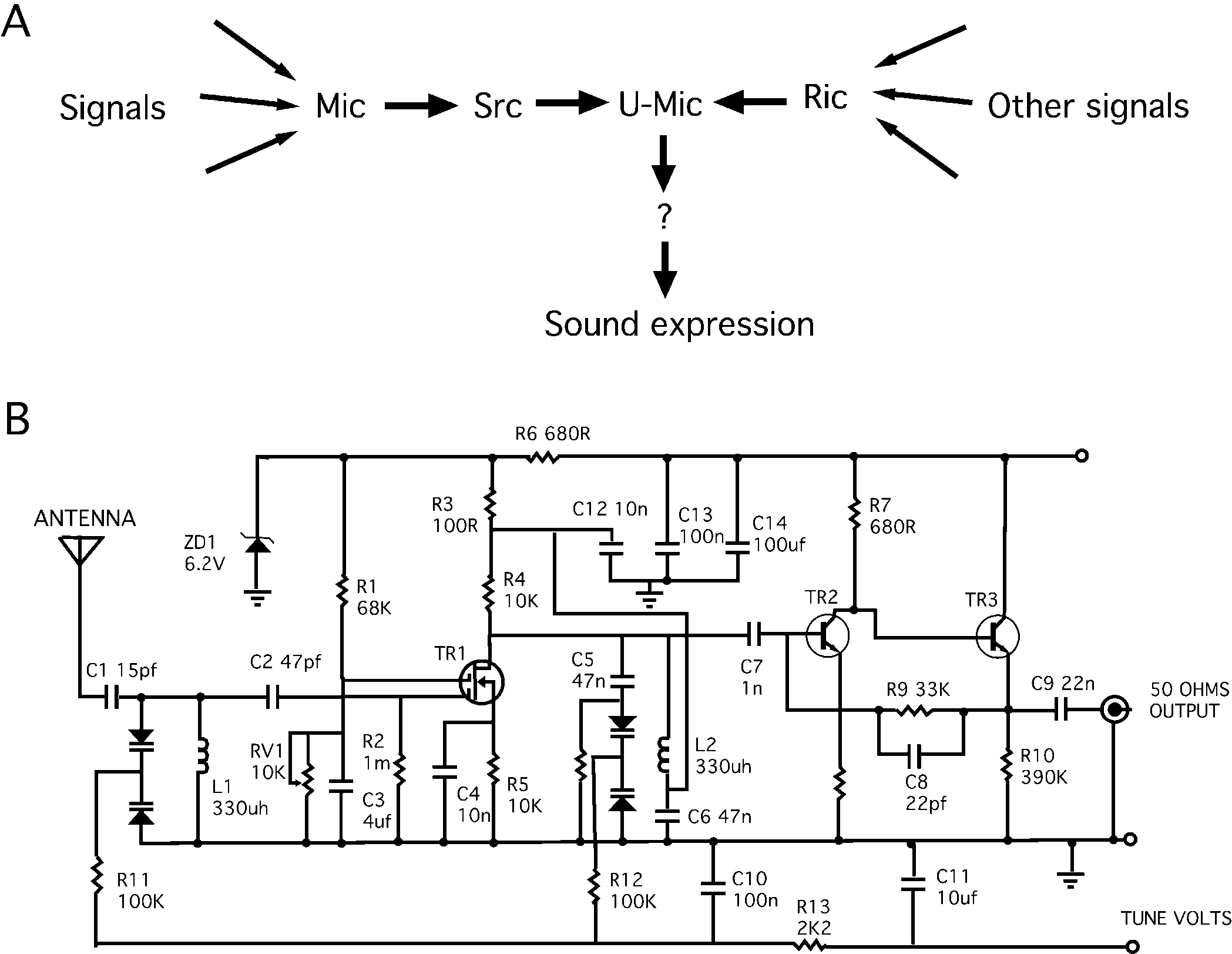

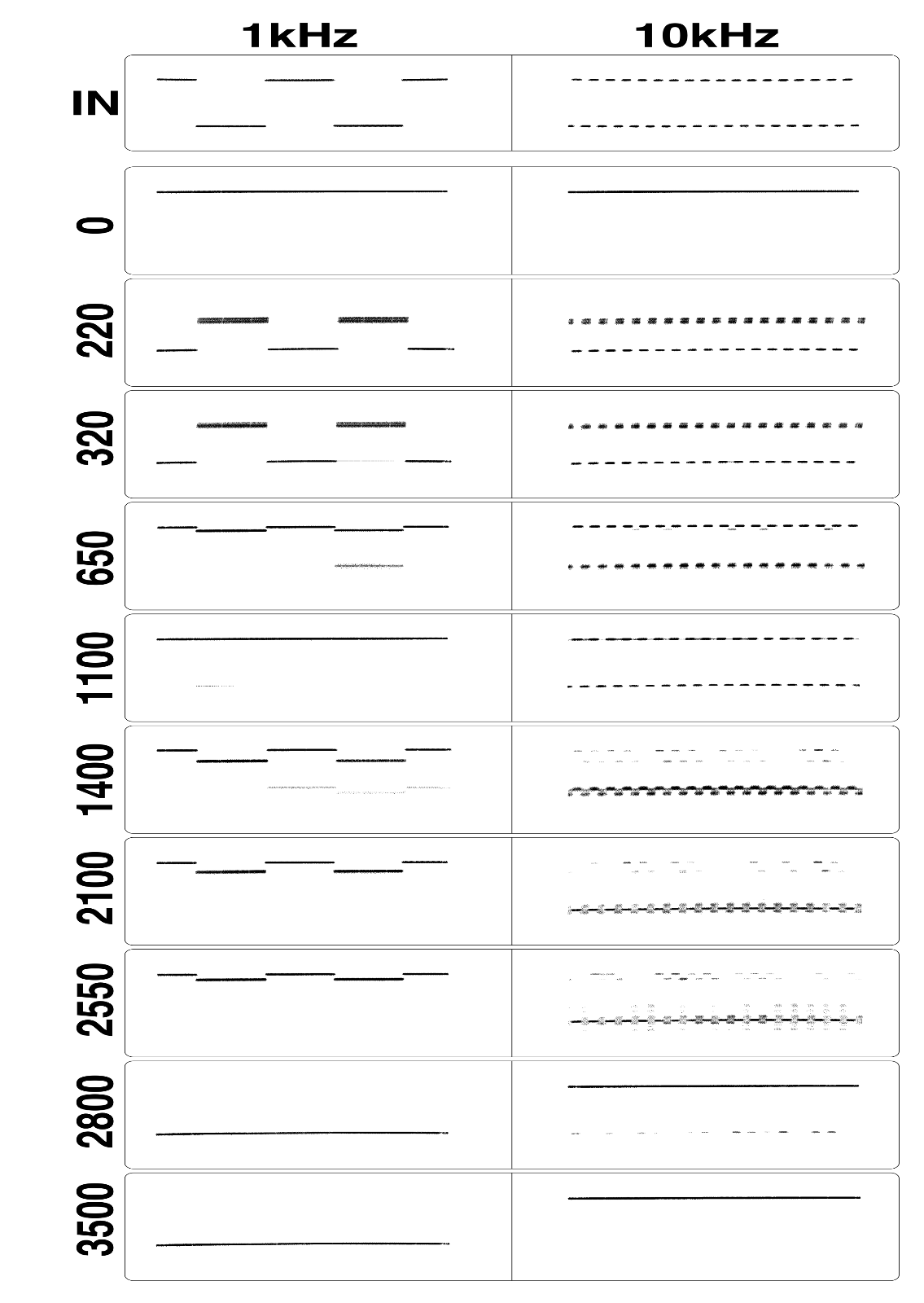

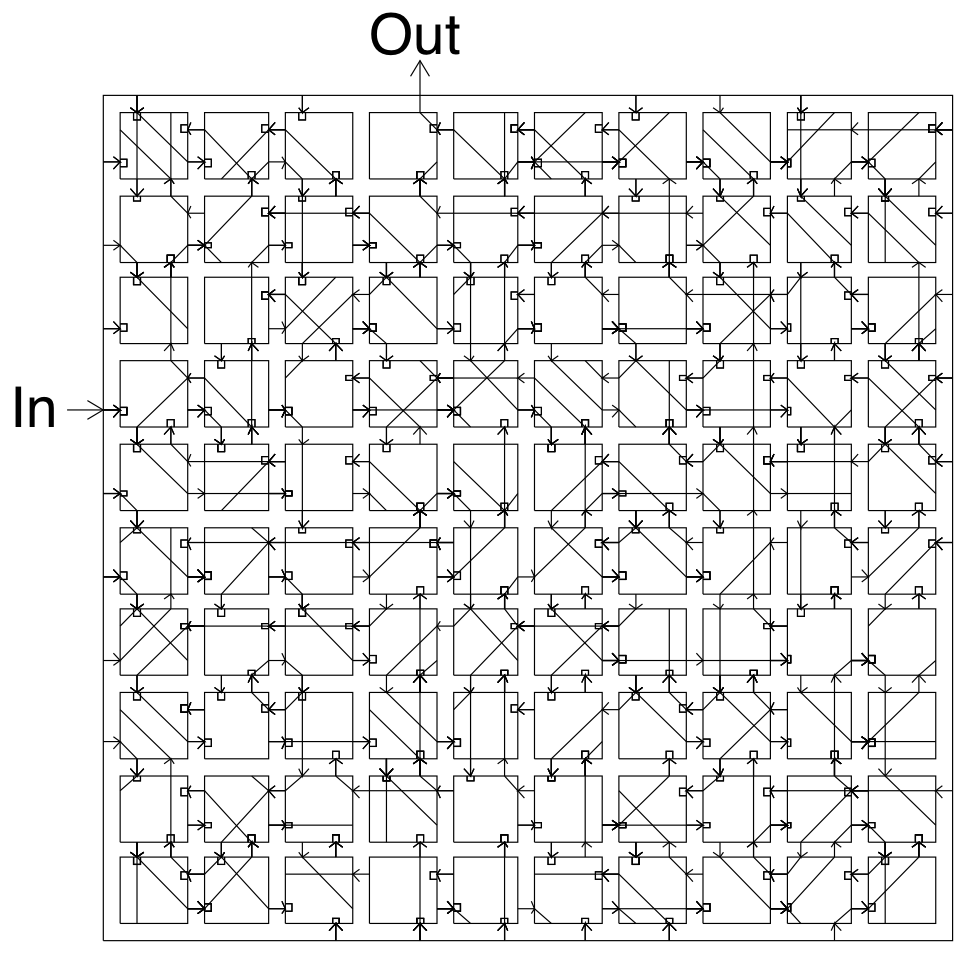

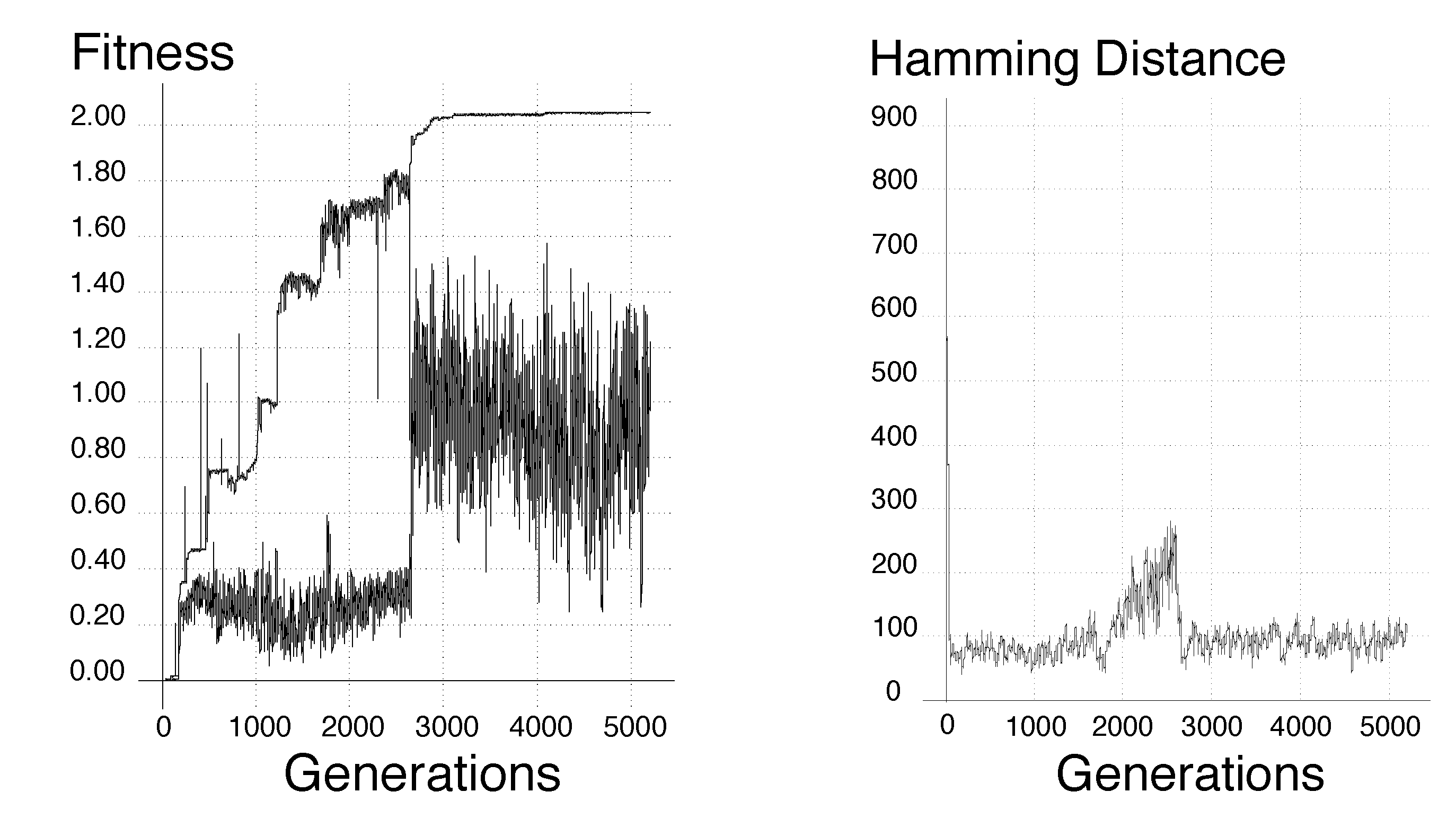

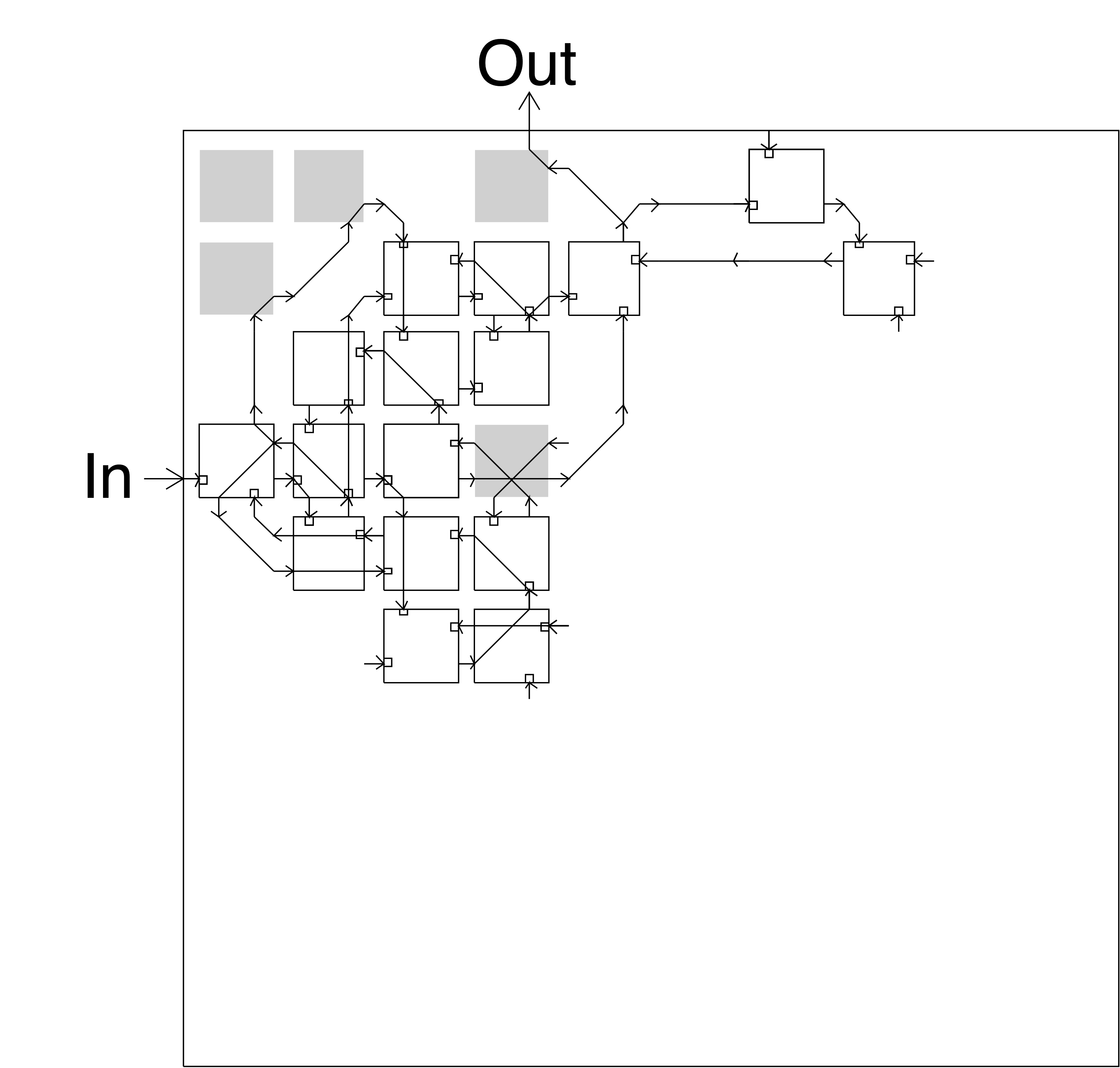

Machine learning outperforms humans for some tasks

Thompson et al. Proc. 1st Int. Conf. on Evolvable Systems, 1996

Basic Statistical Concepts

Data

- What is a variable?

- What is an observation?

- What is N?

Types of variables

- Categorical

- Numerical/continuous

- Ordinal

Probability

Coin toss example

A set of trials: HTHHHTTHHTT

Two possibilities:

- Fair coin (heads 50%, tails 50%)

- Biased (heads 60%, tails 40%)
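One way to compare the two possibilities is to compute the likelihood of the observed sequence under each coin. The following is a minimal sketch that assumes the tosses are independent; the function name `sequence_likelihood` is illustrative only.

```python
# Likelihood of the observed tosses under each candidate coin.
trials = "HTHHHTTHHTT"  # sequence from the slide
n_heads = trials.count("H")
n_tails = trials.count("T")


def sequence_likelihood(p_heads: float) -> float:
    """Probability of this exact sequence if tosses are independent."""
    return p_heads ** n_heads * (1 - p_heads) ** n_tails


lik_fair = sequence_likelihood(0.5)
lik_biased = sequence_likelihood(0.6)
likelihood_ratio = lik_biased / lik_fair  # > 1 would favor the biased coin

print(f"heads={n_heads}, tails={n_tails}")
print(f"fair: {lik_fair:.3e}, biased: {lik_biased:.3e}, ratio: {likelihood_ratio:.3f}")
```

With 6 heads in 11 tosses the two likelihoods are nearly equal, so a sequence this short barely distinguishes the two coins.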

Distributions

We’ve already been talking about these! Distributions describe the range of probabilities that exist for all possible outcomes.

Other Probabilities

- Conditional probability

- The probability of an event given that another event has occurred.

- Marginal distribution

- The probability distribution regardless of other observations/factors.

- Joint probability

- In a multivariate probability space, the distribution for more than one variable.

- Complementary event

- The probability of an event not occurring.
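These definitions can be tied together with a small joint probability table. The sketch below uses made-up probabilities for two binary events A and B (the numbers and function names are assumptions for illustration) and derives the marginal, conditional, and complementary probabilities from the joint.

```python
# Hypothetical joint probabilities P(A, B) for two binary events.
joint = {
    (True, True): 0.20,
    (True, False): 0.30,
    (False, True): 0.10,
    (False, False): 0.40,
}


def marginal_a(a: bool) -> float:
    """P(A=a): sum the joint over every value of B."""
    return sum(p for (ai, _), p in joint.items() if ai == a)


def marginal_b(b: bool) -> float:
    """P(B=b): sum the joint over every value of A."""
    return sum(p for (_, bi), p in joint.items() if bi == b)


def conditional_a_given_b(a: bool, b: bool) -> float:
    """P(A=a | B=b) = P(A=a, B=b) / P(B=b)."""
    return joint[(a, b)] / marginal_b(b)


p_a = marginal_a(True)                           # marginal
p_a_given_b = conditional_a_given_b(True, True)  # conditional
p_not_a = 1 - p_a                                # complementary event

print(p_a, p_a_given_b, p_not_a)
```

Here the conditional probability of A given B differs from the marginal probability of A, which indicates that A and B are not independent in this made-up table.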

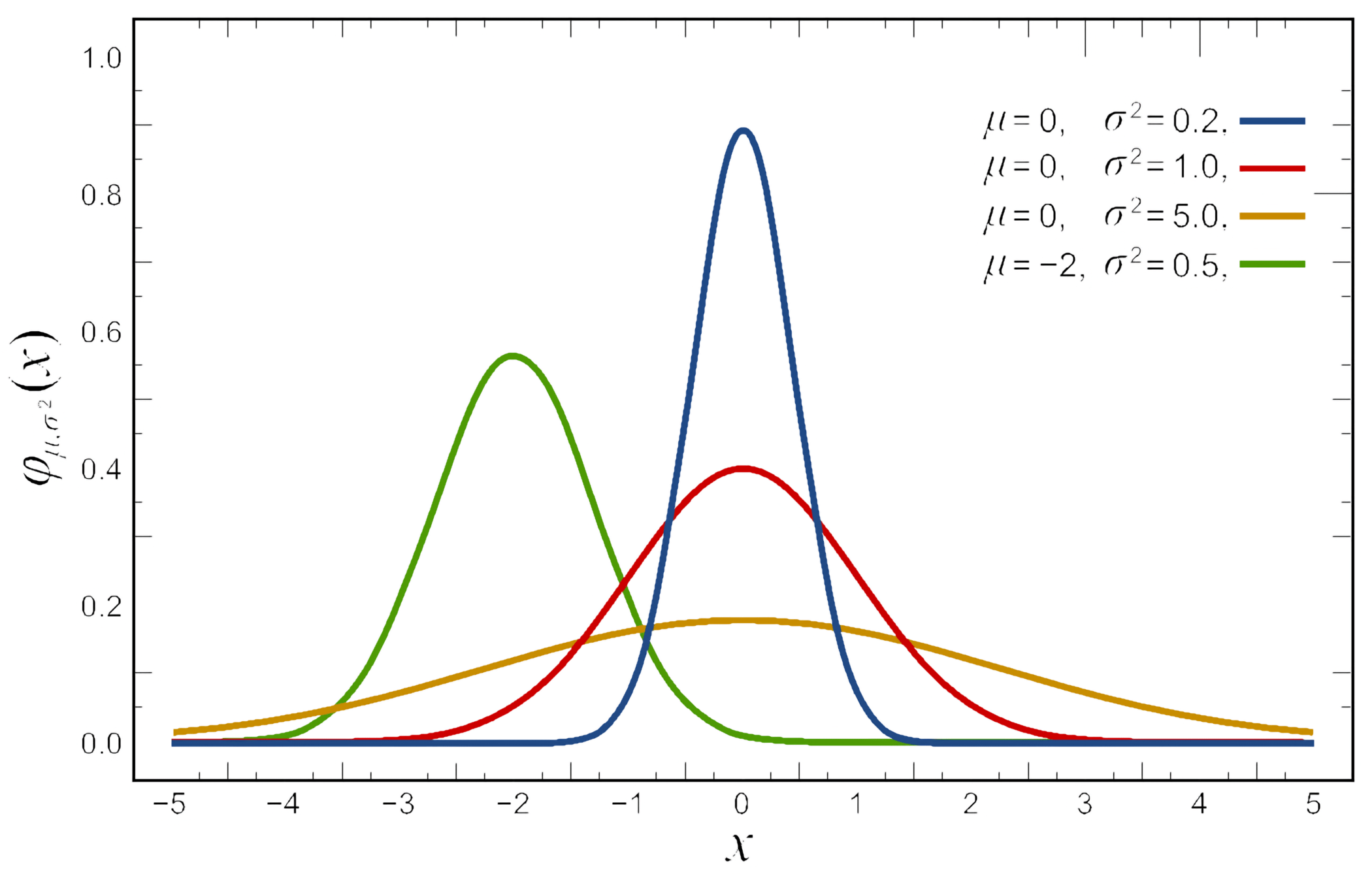

Normal Distributions

- \(\mu\): center of the distribution

- \(\sigma\): standard deviation

- \(\sigma^2\): variance

\[f(x)={\frac {1}{\sqrt {2\pi \sigma ^{2}}}}e^{-{\frac {(x-\mu )^{2}}{2\sigma ^{2}}}}\]

Normal Distributions

For a standard normal distribution (\(\mu = 0\), \(\sigma = 1\)):

\[f(x)=\frac{1}{\sqrt{2\pi}}\; e^{-\frac{x^2}{2}}\]

Area between:

- One standard deviation: 68%

- Two stdev: 95%

- Three stdev: 99.7%

You can normalize any normal distribution to the standard normal.
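The areas above, and the normalization step, can be checked numerically. This is a minimal sketch using Python's standard-library `statistics.NormalDist`; the values of `mu`, `sigma`, and `x` are arbitrary choices for illustration.

```python
from statistics import NormalDist

std_normal = NormalDist(mu=0.0, sigma=1.0)


def area_within(k: float) -> float:
    """Probability that a standard normal value lies within k stdev of 0."""
    return std_normal.cdf(k) - std_normal.cdf(-k)


areas = {k: area_within(k) for k in (1, 2, 3)}

# Normalizing: z = (x - mu) / sigma maps any normal onto the standard normal.
mu, sigma = 10.0, 2.0  # hypothetical parameters
x = 13.0
z = (x - mu) / sigma
cdf_gap = abs(NormalDist(mu, sigma).cdf(x) - std_normal.cdf(z))

for k, area in areas.items():
    print(f"within {k} stdev: {area:.4f}")
print(f"z = {z}, difference between the two CDF values: {cdf_gap:.2e}")
```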

Other Distributions

- Normal Distribution

- Describes many naturally observed variables and is parameterized by its mean and standard deviation.

- Exponential Distribution

- Describes the time between events in Poisson processes.

- Poisson

- Describes the number of events that a Poisson process produces in a fixed time frame.

- Gamma

- Used in Bayesian statistics, often for modeling waiting times.

- Bernoulli

- Like a coin flip. Binary outcome.

- Binomial

- Describes the number of successes in a sequence of Bernoulli trials.

- Multinomial

- Generalization of Binomial for more than two states.

Distribution moments

The moments of a distribution describe its shape: \[\mu_{n}=\int_{-\infty}^{\infty}(x-c)^{n}\,f(x)\,\mathrm{d} x\]

- Mean (\(n=1\)), variance (\(n=2\)), skewness (\(n=3\)), kurtosis (\(n=4\))

- These properties are essential for determining how data will behave during analysis:

- How might your measurements need to change with changes in variance?

- What are these values for a normal distribution?
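The moment integral can be approximated numerically. The sketch below applies a simple midpoint rule to a normal density with arbitrarily chosen parameters. It makes two assumptions about convention: the mean is taken about \(c = 0\) and the higher moments about the mean, and skewness and kurtosis are reported in their usual standardized form (divided by the appropriate power of the variance).

```python
from statistics import NormalDist

dist = NormalDist(mu=1.0, sigma=2.0)  # hypothetical parameters
lo = dist.mean - 10 * dist.stdev      # integration limits wide enough
hi = dist.mean + 10 * dist.stdev      # that the tails are negligible


def moment(n: int, c: float = 0.0, steps: int = 20000) -> float:
    """Midpoint-rule approximation of the integral of (x - c)^n f(x) dx."""
    dx = (hi - lo) / steps
    total = 0.0
    for i in range(steps):
        x = lo + (i + 0.5) * dx
        total += (x - c) ** n * dist.pdf(x)
    return total * dx


mean = moment(1)                                # first moment about 0
variance = moment(2, c=mean)                    # second central moment
skewness = moment(3, c=mean) / variance ** 1.5  # standardized third moment
kurtosis = moment(4, c=mean) / variance ** 2    # standardized fourth moment

print(mean, variance, skewness, kurtosis)
```

For a normal distribution the results should approach \(\mu\), \(\sigma^2\), 0, and 3 respectively.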

Sample statistics

If we drew a sample of size \(n\) (\(n=3\), say) many times, we could build a sampling distribution of each statistic (e.g., one for the sample mean and one for the sample standard deviation).

General properties of sampling distributions:

- The sampling distribution of a statistic often tends to be centered at the value of the population parameter estimated by the statistic

- The spread of the sampling distributions of many statistics tends to grow smaller as sample size \(n\) increases

- As \(n\) increases, sampling distributions tend towards normality. If a process has mean \(\mu\) and standard deviation \(\sigma\), then the sampling distribution of the sample mean has mean \(\mu\) and standard deviation \(\sigma / \sqrt{n}\)

Sample statistics

- This means that as \(n\) increases, the sample mean \(\overline{x}\) becomes a better estimate of \(\mu\).

- The standard deviation of the sample mean, \(\sigma / \sqrt{n}\), is called the standard error of the mean.

- When a population distribution is normal, the sampling distribution of the sample mean is also normal, regardless of \(n\).

- And the central limit theorem states that the sampling distribution can be approximated by a normal distribution when the sample size, \(n\), is sufficiently large.

- Rule of thumb is that \(n=30\) is sufficiently large, but there are times when smaller \(n\) will suffice. Greater \(n\) is required with higher skew.
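The properties of the sampling distribution can be explored by simulation. The following sketch repeatedly draws samples from an exponential distribution with rate 1 (so \(\mu = \sigma = 1\)) and summarizes the sample means. The seed, sample sizes, and number of repeats are arbitrary choices for illustration.

```python
import random
from math import sqrt
from statistics import mean, stdev

random.seed(0)  # fixed seed so the sketch is repeatable


def sample_means(n: int, repeats: int = 5000, rate: float = 1.0) -> list:
    """Means of `repeats` independent samples of size n from Exp(rate)."""
    return [
        mean([random.expovariate(rate) for _ in range(n)])
        for _ in range(repeats)
    ]


summary = {}
for n in (3, 30):
    means = sample_means(n)
    # center, observed spread, and the sigma / sqrt(n) prediction (sigma = 1)
    summary[n] = (mean(means), stdev(means), 1.0 / sqrt(n))

for n, (center, spread, predicted) in summary.items():
    print(f"n={n}: center={center:.3f}, spread={spread:.3f}, predicted={predicted:.3f}")
```

The center stays near \(\mu\) for both sample sizes, while the spread shrinks roughly as \(\sigma / \sqrt{n}\). A histogram of `sample_means(3)` would still show the skew of the exponential distribution.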

Hypothesis Testing

In hypothesis testing, we state a null hypothesis that we will test; if the observed data would be sufficiently unlikely under it (a probability below some chosen threshold), then we reject it.

For example:

- \(H_0\): A particular set of points comes from a normal distribution with mean \(\mu\) and variance \(\sigma^2\).

- \(H_0\): Two sets of observations were sampled from distributions with the same mean.

When we do an experiment, we typically take a sampling of several observations, to be more certain of the result.

T-distribution

When \(n\) is small, use the t-distribution with \(n-1\) degrees of freedom.

- \(H_0\): Assume \(\mu=\mu_0\) then calculate t. \[t = \frac{\overline{x} - \mu_0}{s/\sqrt{n}}\]

- You can think of \(t\) as designed to be like \(z/s\): it is sensitive to the magnitude of the difference from the hypothesized mean and scaled to control for the spread.

- When comparing two means (null hypothesis: the means are the same; variances and sample sizes assumed equal, with \(n\) observations per group): \[t=\frac{{\bar{X}}_1-{\bar{X}}_2}{\sqrt{\frac{s_{X_1}^2+s_{X_2}^2}{n}}}\]
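Both t statistics can be computed directly from their formulas. The sketch below uses only the standard library and made-up measurements; the function names are illustrative. Converting \(t\) to a p-value requires the t-distribution's CDF, which is not included here.

```python
from math import sqrt
from statistics import mean, stdev, variance


def one_sample_t(x, mu0):
    """t = (xbar - mu0) / (s / sqrt(n)), with n - 1 degrees of freedom."""
    n = len(x)
    return (mean(x) - mu0) / (stdev(x) / sqrt(n))


def two_sample_t(x1, x2):
    """Two-sample t, assuming equal group sizes and equal variances."""
    if len(x1) != len(x2):
        raise ValueError("this form of the statistic assumes equal sizes")
    n = len(x1)
    return (mean(x1) - mean(x2)) / sqrt((variance(x1) + variance(x2)) / n)


# Hypothetical measurements, for illustration only.
x = [5.1, 4.9, 5.6, 5.8, 6.0]
y = [4.8, 5.0, 5.2, 4.7, 5.3]

t_one = one_sample_t(x, mu0=5.0)
t_two = two_sample_t(x, y)
dof_one = len(x) - 1

print(f"one-sample t = {t_one:.3f} with {dof_one} degrees of freedom")
print(f"two-sample t = {t_two:.3f}")
```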

Effect size

- The \(\sqrt{n}\) factor scales the t-value

- If the data are normal, the standard deviation of the sampling distribution scales as \(1 / \sqrt{n}\)

- With large \(n\), p-values become significant even with a small difference in means

- Exercise caution and report the effect size

- For example, a 1% or 50% difference in the means
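A short calculation shows how sample size alone drives the t-value. The sketch below holds a small difference in means fixed and varies only \(n\); the values of `diff` and `s` are assumptions chosen for illustration.

```python
from math import sqrt


def t_for_fixed_difference(diff: float, s: float, n: int) -> float:
    """One-sample t when the observed difference and spread are held fixed."""
    return diff / (s / sqrt(n))


diff, s = 0.01, 1.0    # hypothetical: a difference of 1% of one stdev
effect_size = diff / s  # does not depend on n

t_values = {
    n: t_for_fixed_difference(diff, s, n)
    for n in (100, 10_000, 1_000_000)
}

for n, t in t_values.items():
    print(f"n={n}: t={t:.1f}, effect size={effect_size}")
```

The effect size stays the same while \(t\) grows by a factor of 10 for every 100-fold increase in \(n\), which is why the effect size should be reported alongside the p-value.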

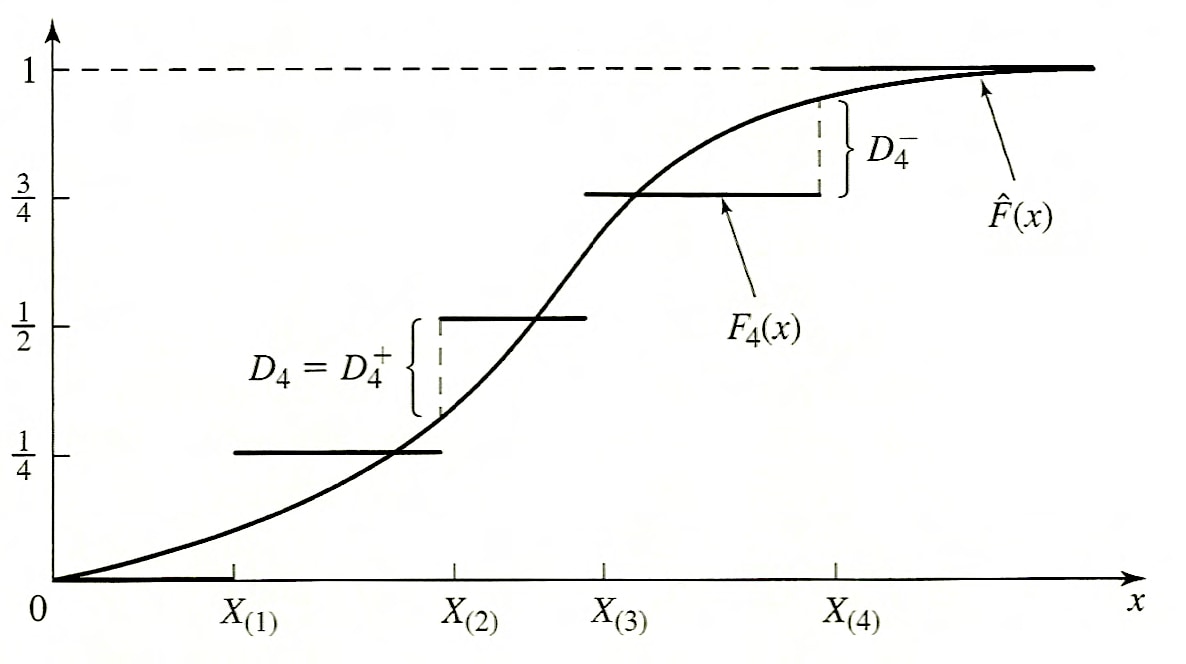

Kolmogorov-Smirnov Test

- Comparison of an empirical distribution function with the distribution function of the hypothesized distribution.

- Does not depend on the grouping of data.

- Relatively insensitive to outlier points (i.e., distribution tails).

Kolmogorov-Smirnov Test

- K-S test is most useful when the sample size is small

- Geometric meaning of the test statistic:

Kolmogorov-Smirnov Test Statistic

This is the operation that creates the sample distribution.

\[D_n^{+} = \max_{1\leq i\leq n} \left(\frac{i}{n} - \hat{F}(X_{(i)})\right)\]

\[D_n^{-} = \max_{1\leq i\leq n} \left(\hat{F}(X_{(i)}) - \frac{i - 1}{n}\right)\]

\[D_n = \max \left( D_n^{+}, D_n^{-} \right)\]

Not expressed in one equation with absolute value because distance is assessed from opposite ends for each.
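The test statistic can be computed directly from these definitions. The sketch below takes \(\hat{F}\) to be the standard normal CDF as the hypothesized distribution and uses a made-up sample; both are assumptions for illustration.

```python
from statistics import NormalDist


def ks_statistic(sample, cdf):
    """Return (D_n, D_n_plus, D_n_minus) for a sample and hypothesized CDF."""
    xs = sorted(sample)  # order statistics X_(1) <= ... <= X_(n)
    n = len(xs)
    # Empirical CDF just after each point (i/n) versus the hypothesized CDF.
    d_plus = max(i / n - cdf(x) for i, x in enumerate(xs, start=1))
    # Hypothesized CDF versus the empirical CDF just before each point.
    d_minus = max(cdf(x) - (i - 1) / n for i, x in enumerate(xs, start=1))
    return max(d_plus, d_minus), d_plus, d_minus


sample = [-1.2, -0.4, 0.1, 0.3, 0.9, 1.7]  # hypothetical observations
d_n, d_plus, d_minus = ks_statistic(sample, NormalDist().cdf)

print(f"D+ = {d_plus:.3f}, D- = {d_minus:.3f}, D = {d_n:.3f}")
```

The two one-sided distances are needed because the empirical distribution function jumps at each observation, so the gap to the hypothesized curve is measured on both sides of the jump.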

How is this then converted to a p-value?

Graphical Analysis

Testing errors

- Type I error: error of rejecting \(H_0\) when it is true (false positive)

- Type II error: not rejecting \(H_0\) when it is false (false negative)

- Alpha: the significance level. In the long run, a true \(H_0\) would be falsely rejected this fraction of the time (i.e., we are willing to accept a fraction \(\alpha\) of false positives)
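The long-run meaning of alpha can be illustrated by simulating experiments in which \(H_0\) is true. The sketch below uses a two-sided z-test with known \(\sigma = 1\) rather than a t-test, purely to stay within the standard library; the seed, sample size, and number of trials are arbitrary.

```python
import random
from math import sqrt
from statistics import NormalDist, mean

random.seed(1)  # fixed seed so the sketch is repeatable
alpha = 0.05
z_crit = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided critical value


def false_positive_rate(n: int = 20, trials: int = 5000) -> float:
    """Fraction of experiments rejecting H0 (mean = 0) when H0 is true."""
    rejections = 0
    for _ in range(trials):
        sample = [random.gauss(0.0, 1.0) for _ in range(n)]
        z = mean(sample) * sqrt(n)  # sigma = 1 is treated as known
        if abs(z) > z_crit:
            rejections += 1  # a Type I error, since H0 holds by construction
    return rejections / trials


rate = false_positive_rate()
print(f"critical z = {z_crit:.3f}, observed false positive rate = {rate:.3f}")
```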

Beware of goodness-of-fit tests because they are unlikely to reject any distribution with little data, and are very sensitive to the smallest systematic error with lots of data.

Multiple hypotheses

We want to test whether gene expression differs between two cells by more than chance alone would explain. We test the two samples with a p-value cutoff of 0.05:

- How many false positives would we expect after testing 20 genes?

- How about 1000 genes?
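A quick calculation gives a feel for the scale of the problem. The sketch below assumes that every null hypothesis is true (no gene truly differs) and, for the second function, that the tests are independent; both are simplifying assumptions.

```python
alpha = 0.05  # p-value cutoff from the example


def expected_false_positives(n_tests: int, alpha: float = alpha) -> float:
    """Expected number of rejections when every null hypothesis is true."""
    return n_tests * alpha


def prob_at_least_one(n_tests: int, alpha: float = alpha) -> float:
    """Chance of one or more false positives across independent tests."""
    return 1 - (1 - alpha) ** n_tests


for n_tests in (20, 1000):
    print(
        f"{n_tests} genes: expect {expected_false_positives(n_tests):.0f} "
        f"false positives, P(at least one) = {prob_at_least_one(n_tests):.4f}"
    )
```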

What about false negatives?

What does this mean when it comes to hypothesis testing?

Reading & Resources

Review Questions

- What are 4 reasons to build a model?

- \(p(x) = a/x\) for \(1 < x < 10\) describes a distribution pdf. What is \(a\)? What is the expression for the distribution mean?

- What are the three kinds of variables? Give an example of each.

- You are interested in the sampling distribution of the mean for an exponential distribution (N=8). What can you say about it relative to the original one?

- What can you say about the sampling distribution for the N=1 case?

- Studying an anti-tumor compound, you create 2 tumors in each of 5 mice, one in each flank (i.e., 1 mouse gets 2 tumors), then treat the animals with the compound and measure tumor growth. What is N? Justify your answer.

- You want to model a process where each successive outcome is 1/5 as likely (e.g., getting 3 is 1/5 as likely as getting 2). What is the expression for this distribution?